Meta Refuses Removal After Police Officer Reports Deepfake Investment Ad Featuring Indian President Murmu

Meta allegedly declined to remove a deepfake advertisement that falsely portrayed Indian President Droupadi Murmu endorsing a fraudulent investment scheme. The content was formally reported by a senior Indian Police Service officer from Andhra Pradesh Police but was left online, raising questions about the platform’s content moderation standards.

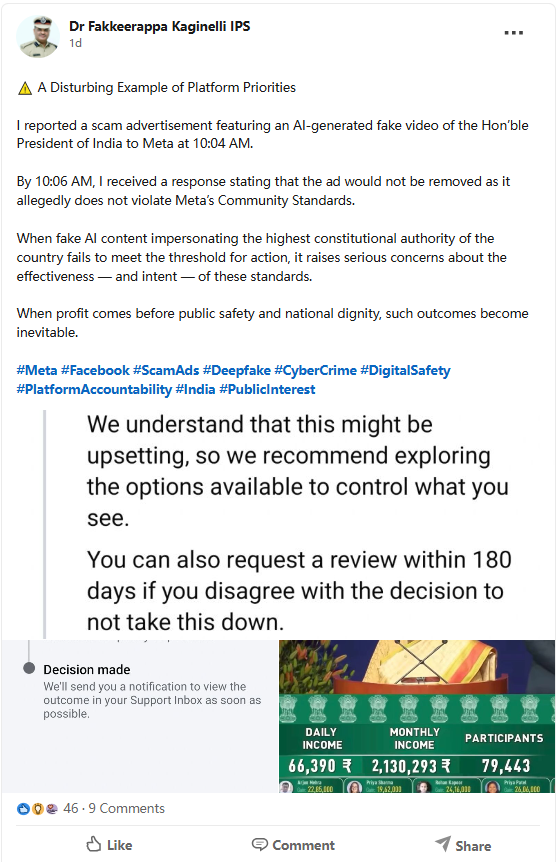

Dr Fakkeerappa Kaginelli publicly flagged the issue in a LinkedIn post, detailing how the misleading Facebook advertisement was reported to Meta at 10:04 AM. By 10:06 AM, Meta responded that the video would not be taken down, claiming it did not violate Community Standards.

Fakkeerappa Kaginelli’s LinkedIn post regarding Meta's refusal to take down a fraudulent ad

The AI‑generated video, which has already circulated widely, was strategically posted on India’s Republic Day, a moment of heightened public attention around the President. In the video, President Murmu is falsely shown promoting a lucrative investment opportunity.

Cybercrime investigators note that scammers often exploit national holidays and trending figures to maximise reach and credibility.

BrokersView Reminds You

Deepfake investment scams are escalating worldwide. Fraudsters increasingly use AI‑generated content to forge endorsements and impersonate trusted leaders, regulators, and financial institutions.

Investor vigilance remains the strongest defense against these evolving fraud tactics, given Meta’s ongoing lapses in addressing fraudulent ads across its social media platforms.

In November 2025, reporting on internal Meta documents indicated that the company had projected roughly 10% of its 2024 revenue, about $16 billion, would come from ads for scams and banned goods. The revelation prompted U.S. Senators Richard Blumenthal and Josh Hawley to urge federal regulators to investigate Meta.

Read more deepfake scam cases:

- Alert: Deepfake Video Falsely Shows Finance Minister and Ex‑RBI Governor Endorsing ₹1 Million Monthly Return Scam

- Canadian Senior Loses Savings to AI-Generated Deepfake Scam, Highlighting Expanding Fraud Problem

- Deepfake Scam Hits Malta: AI Videos and Fake News Exploit Maltese Ministers in Fraudulent Scheme